Vision+: Enhancing Medical Imaging with AI

During my studies in Computer Science at King Abdulaziz University (KAU), I led a capstone project with my teammates Muhanna Al-Muhanna and Osama Abdulaziz Al-Dosari. Together, we built Vision+, an AI-powered DICOM viewer that enhances low-dose CT scans, aiming to improve image clarity and assist radiologists in making more accurate diagnoses.

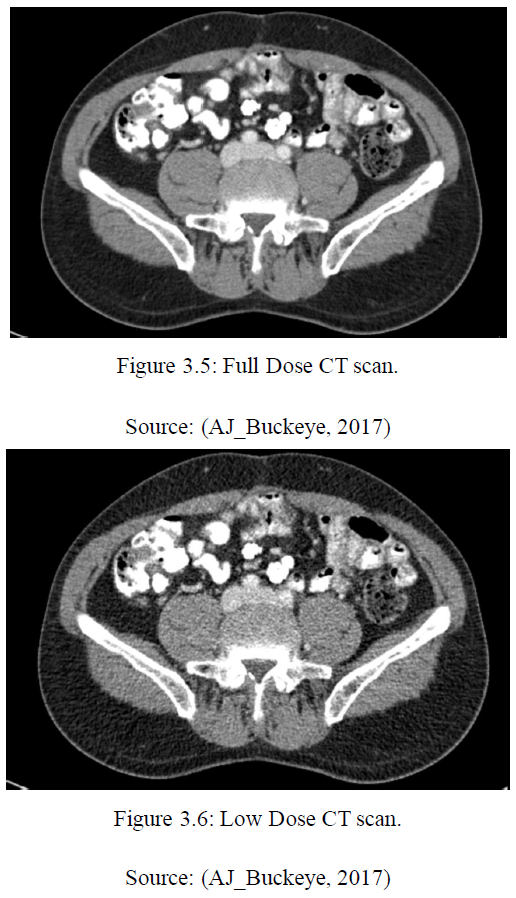

The Problem: Low-Dose CT Scans and Image Noise

CT scans are essential for diagnosis, but lowering the radiation dose (low-dose CT) often produces noisy, lower-quality images. This noise can obscure critical details and make diagnosis more difficult. Our goal was to enhance these low-dose images without increasing the dose, giving doctors the best of both worlds: safer scans and clearer results.

Our Solution: AI-Enhanced DICOM Viewer

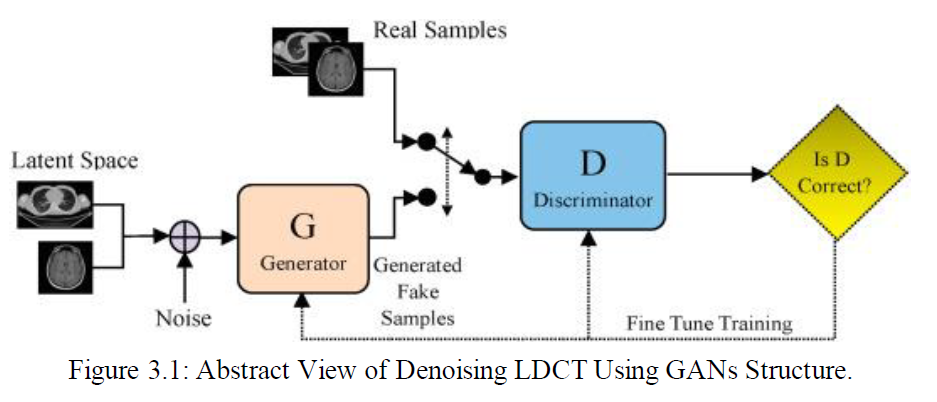

We created a smart, web-based DICOM viewer that automatically denoises low-dose CT images using AI. At its core is a Conditional Generative Adversarial Network (cGAN) trained to transform noisy images into clearer ones.

How the AI Works

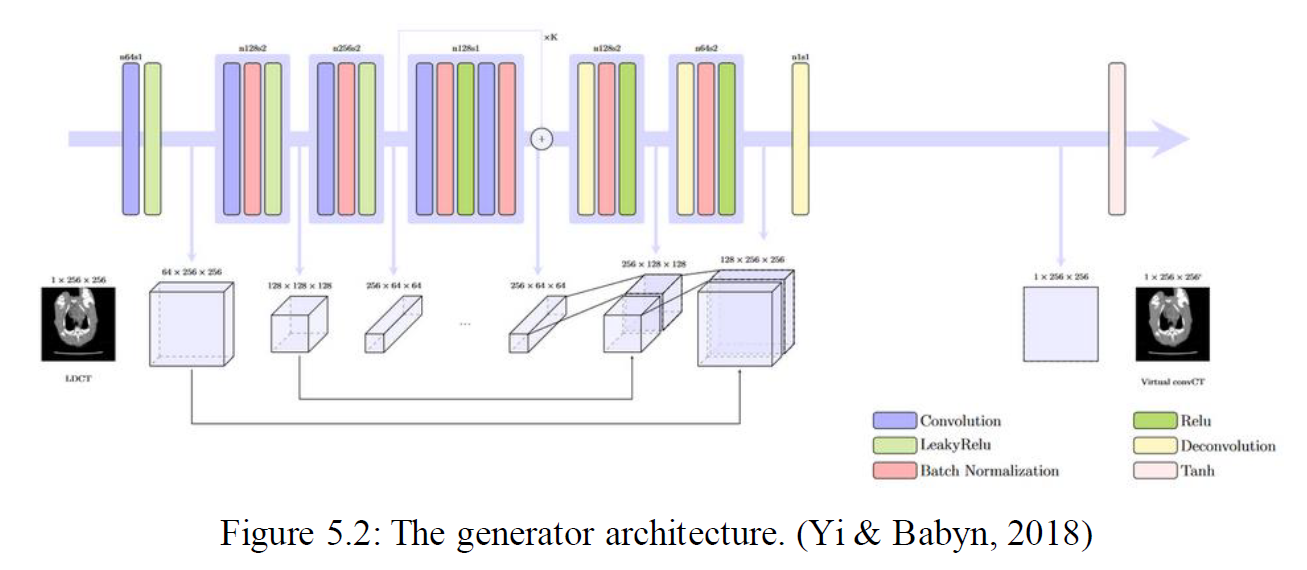

We used a cGAN architecture composed of two neural networks:

- Generator (U-Net-based): Takes a noisy CT scan and produces a cleaned-up version.

- Discriminator (PatchGAN): Scores the image patch by patch, trying to tell real normal-dose images apart from the generator's outputs.
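A PatchGAN outputs a grid of real/fake scores instead of a single number, and each score "sees" only a limited patch of the input. The post doesn't list the discriminator's layers. The sketch below assumes the standard 70x70 PatchGAN from the pix2pix paper (4x4 convolutions with strides 2, 2, 2, 1, 1 and padding 1). It computes how large each scored patch is and how many patch scores a 256x256 input produces. The layer list is an illustrative assumption, not Vision+'s confirmed configuration.

```python
# Hypothetical layer stack: the 70x70 PatchGAN from pix2pix.
# Each entry is (kernel_size, stride, padding); Vision+ may have used different values.
PATCHGAN_LAYERS = [
    (4, 2, 1),  # C64
    (4, 2, 1),  # C128
    (4, 2, 1),  # C256
    (4, 1, 1),  # C512
    (4, 1, 1),  # final 1-channel real/fake score map
]


def receptive_field(layers):
    """Input pixels seen by one output score, walking from the output back to the input."""
    r = 1
    for kernel, stride, _ in reversed(layers):
        r = (r - 1) * stride + kernel
    return r


def output_grid(size, layers):
    """Side length of the score map for a square input of the given side length."""
    for kernel, stride, pad in layers:
        size = (size + 2 * pad - kernel) // stride + 1
    return size


print(receptive_field(PATCHGAN_LAYERS))   # 70
print(output_grid(256, PATCHGAN_LAYERS))  # 30
```

Under these assumptions, each of the 30x30 scores judges one 70x70 region. This design pushes the discriminator, and therefore the generator, to focus on local texture, which is where CT noise lives.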

By training both networks together, the generator learned to produce realistic, denoised images that closely resemble normal-dose scans.
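Training "together" means alternating between two objectives. The sketch below assumes the pix2pix recipe, since the post doesn't state Vision+'s exact losses. The discriminator learns to score (noisy, full-dose) pairs as real and (noisy, generated) pairs as fake. The generator is rewarded for fooling the discriminator and for staying close to the full-dose target through an L1 term. The weight `l1_weight=100.0` is the pix2pix default and is an assumption here. The function names are hypothetical, and NumPy stands in for the TensorFlow training code.

```python
import numpy as np

EPS = 1e-7  # keeps log() away from zero


def bce(pred, target):
    """Binary cross-entropy on discriminator probabilities in (0, 1)."""
    pred = np.clip(pred, EPS, 1 - EPS)
    return float(np.mean(-(target * np.log(pred) + (1 - target) * np.log(1 - pred))))


def discriminator_loss(d_real, d_fake):
    """d_real: patch scores for (noisy, full-dose) pairs; d_fake: for (noisy, generated) pairs."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake, generated, full_dose, l1_weight=100.0):
    """Adversarial term (fool D on every patch) plus weighted L1 distance to the full-dose image."""
    adversarial = bce(d_fake, np.ones_like(d_fake))
    l1 = float(np.mean(np.abs(full_dose - generated)))
    return adversarial + l1_weight * l1
```

For example, if the discriminator is maximally unsure (every patch score is 0.5) and the generated image exactly matches the target, the generator loss is just ln 2, about 0.693. The L1 term keeps outputs anatomically faithful, while the adversarial term discourages the over-smoothed look that pure pixel-wise losses tend to produce.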

### Dataset

We trained our model using the 2016 NIH-AAPM-Mayo Clinic Low Dose CT Grand Challenge dataset, which includes both full-dose and simulated quarter-dose CT scans for 10 anonymous patients. The model learned to map noisy images to their clear counterparts using this paired data.
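Before paired slices can be fed to a network, the stored DICOM pixel values are usually converted to Hounsfield units (HU = stored × RescaleSlope + RescaleIntercept) and scaled to a fixed range. The sketch below shows one way to build an (input, target) pair. The HU clipping range of -1024 to 3071 and the [-1, 1] output range (to match a tanh generator) are assumptions, not details from the project. With pydicom, the inputs would come from `ds.pixel_array`, `ds.RescaleSlope` and `ds.RescaleIntercept`. The helper names are hypothetical.

```python
import numpy as np


def to_hu(stored, slope=1.0, intercept=-1024.0):
    """Convert stored DICOM pixel values to Hounsfield units: stored * slope + intercept."""
    return stored.astype(np.float32) * slope + intercept


def normalize(hu, hu_min=-1024.0, hu_max=3071.0):
    """Clip to an assumed HU range and scale linearly to [-1, 1]."""
    hu = np.clip(hu, hu_min, hu_max)
    return 2.0 * (hu - hu_min) / (hu_max - hu_min) - 1.0


def make_pair(low_dose_stored, full_dose_stored, slope=1.0, intercept=-1024.0):
    """Build one (input, target) training pair from the same slice at two dose levels."""
    if low_dose_stored.shape != full_dose_stored.shape:
        raise ValueError("paired slices must have the same shape")
    x = normalize(to_hu(low_dose_stored, slope, intercept))
    y = normalize(to_hu(full_dose_stored, slope, intercept))
    return x, y
```

Using the same conversion for both halves of a pair matters. Any mismatch would teach the model a spurious intensity shift instead of denoising.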

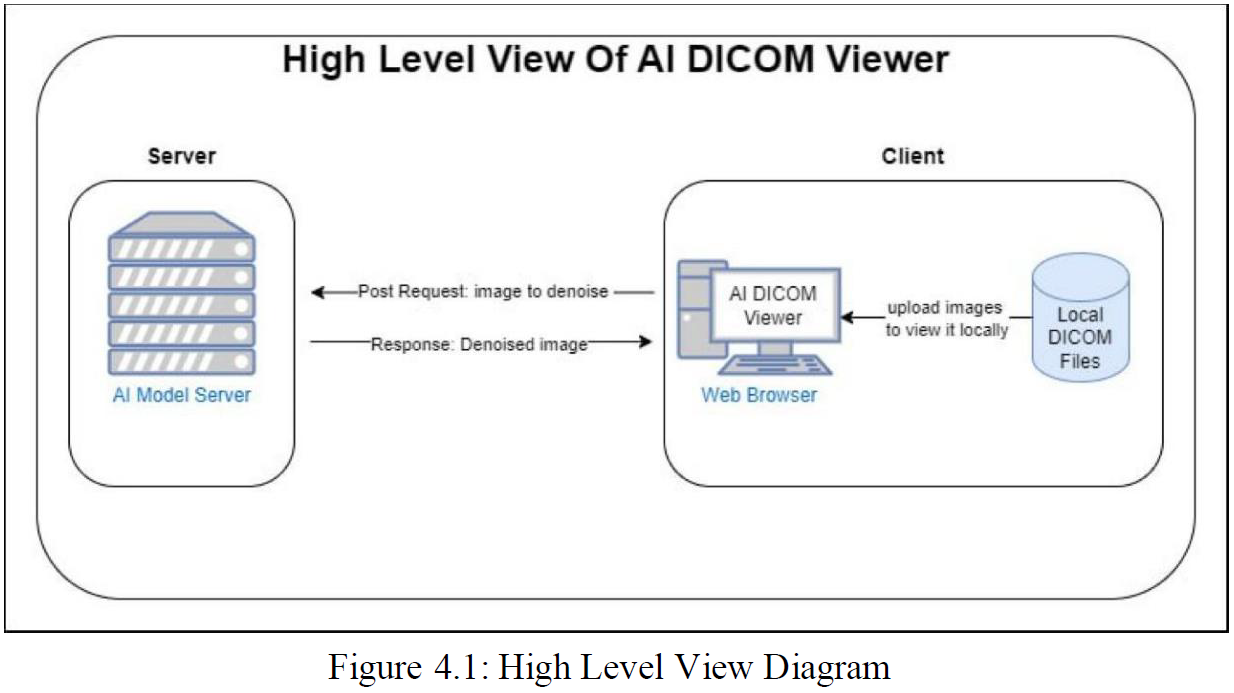

The Application: Web-Based Viewer

We built a full-stack application to make our AI accessible and user-friendly for medical professionals:

- Frontend: Developed in ReactJS using CornerstoneJS for interactive DICOM rendering.

- Backend & AI: The denoising model was implemented in Python with TensorFlow and deployed on a server to handle inference.
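The post doesn't describe the server code. On the inference side, though, a 2D denoiser is typically applied to a CT series one batch of slices at a time. The sketch below assumes `model` is any callable that maps a `(batch, H, W, 1)` array to the same shape. In a real TensorFlow deployment, that callable could be a loaded Keras model's `predict` method. The function name and the default `batch_size` are hypothetical.

```python
import numpy as np


def denoise_volume(volume, model, batch_size=8):
    """Run a 2D denoiser over a (slices, H, W) volume, batch_size slices at a time."""
    volume = np.asarray(volume, dtype=np.float32)
    if volume.shape[0] == 0:
        return volume.copy()  # nothing to denoise
    outputs = []
    for start in range(0, volume.shape[0], batch_size):
        batch = volume[start:start + batch_size, ..., np.newaxis]  # add channel axis
        outputs.append(np.asarray(model(batch))[..., 0])  # drop channel axis
    return np.concatenate(outputs, axis=0)
```

Batching bounds memory use on the server while still keeping the GPU busy. The slice order is preserved, so the result can be written back into the original series for the viewer.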

Results

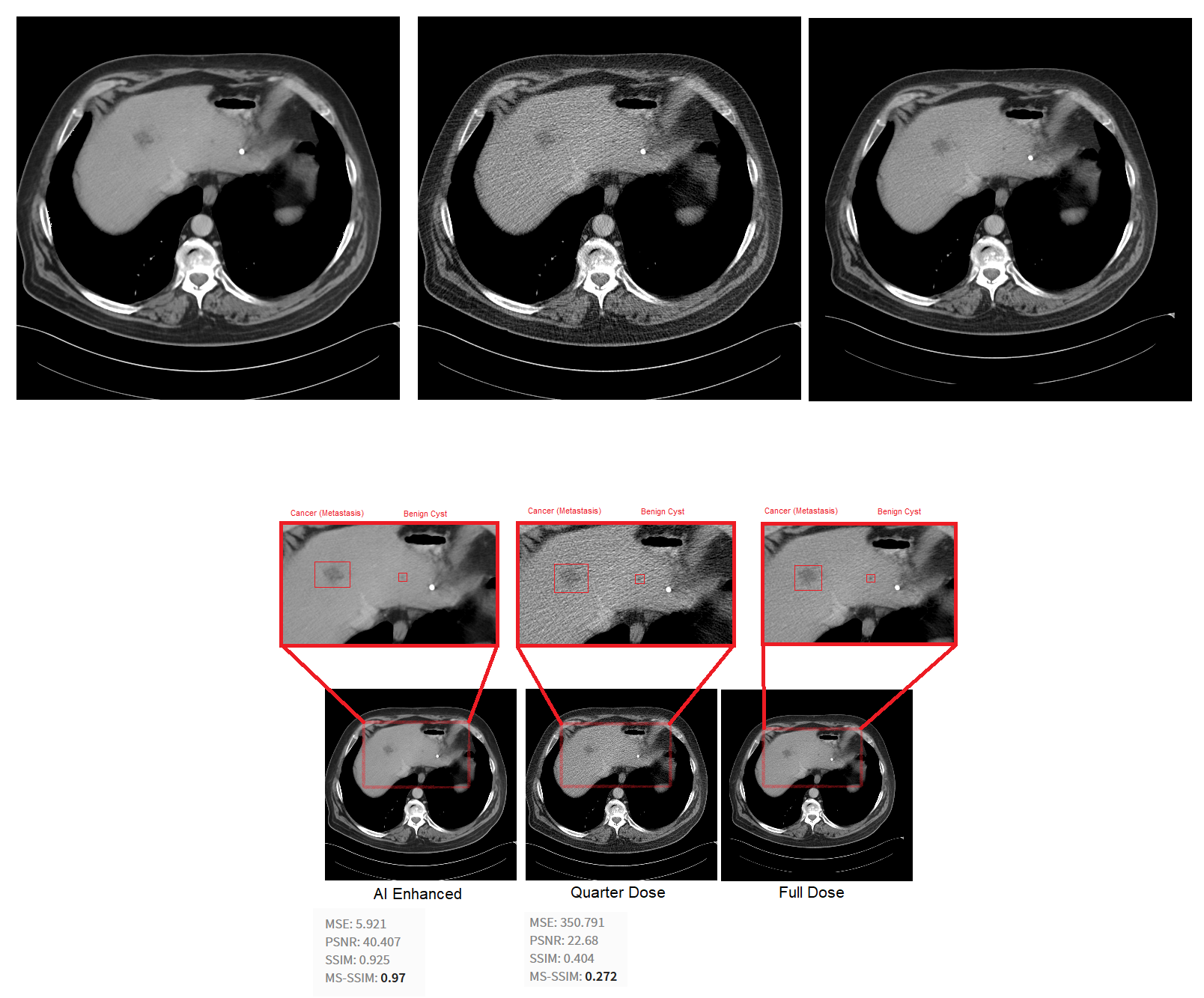

Clearer Images, Better Diagnostics

The AI-enhanced scans were noticeably sharper than the low-dose originals. Key diagnostic features, such as tumors in liver scans, became easier to see after denoising.

### Quantitative Performance

- PSNR (Peak Signal-to-Noise Ratio): Improved from ~22.68 dB to 40.41 dB

- SSIM (Structural Similarity Index): Reached 92.5% (0.925 on SSIM's usual 0 to 1 scale), indicating strong structural preservation

These metrics indicate that the model reduces noise while maintaining important image details.
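For reference, PSNR is defined as 10·log10(MAX² / MSE), where MAX is the intensity range of the images. SSIM compares means, variances and covariance, using constants C1 = (0.01·MAX)² and C2 = (0.03·MAX)². The sketch below implements PSNR and a simplified single-window SSIM. Standard SSIM, such as scikit-image's `structural_similarity`, instead averages the formula over local windows, so the numbers will differ. The post doesn't say which intensity range (`data_range`) was used, and PSNR values depend on that choice. The function names are hypothetical.

```python
import numpy as np


def psnr(reference, test, data_range):
    """PSNR in dB: 10 * log10(data_range^2 / MSE)."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(x, y, data_range):
    """SSIM computed once over the whole image (a simplification of windowed SSIM)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)
```

As a sanity check, a uniform error of 0.1 on images scaled to [0, 1] gives an MSE of 0.01 and therefore a PSNR of 20 dB. An image compared with itself gives an SSIM of exactly 1.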

Why It Matters

Vision+ has the potential to:

- Improve patient safety by supporting lower radiation protocols

- Support radiologists with clearer visuals and improved workflow

- Enable earlier detection of subtle conditions

- Lay the groundwork for future AI-assisted diagnosis tools

What’s Next

This project was a solid proof-of-concept with plenty of room to grow. Possible next steps include:

- Adding features like automatic segmentation and tumor detection

- Improving the model with newer architectures and larger datasets

Working on this project reinforced my passion for using AI in healthcare and gave me practical experience building a real-world, AI-powered tool. I’m excited to keep building on this foundation.